NVIDIA's next AI steps: An ARM deal and a new 'personal supercomputer'

Now it's targeting deep learning in mobile chips.

Soon you won't need one of NVIDIA's tiny Jetson systems if you want to tap into its AI smarts for smaller devices. At its GPU Technology Conference (GTC) today, the company announced it'll be bringing its open source Deep Learning Accelerator (NVDLA) over to ARM's upcoming Project Trillium platform, which is focused on mobile AI. Specifically, NVDLA will help developers by accelerating inferencing, the process of using trained neural networks to perform specific tasks.

While it's a surprising move for NVIDIA, which typically sticks to its own closed platforms, it makes a lot of sense. NVIDIA already relies on ARM designs for its Jetson and Tegra systems. If it's going to make any sort of impact on the mobile and IoT world, it needs to work together with ARM, which dominates those arenas. And ARM could use NVIDIA's technology to prove just how capable its upcoming chip platform will be.

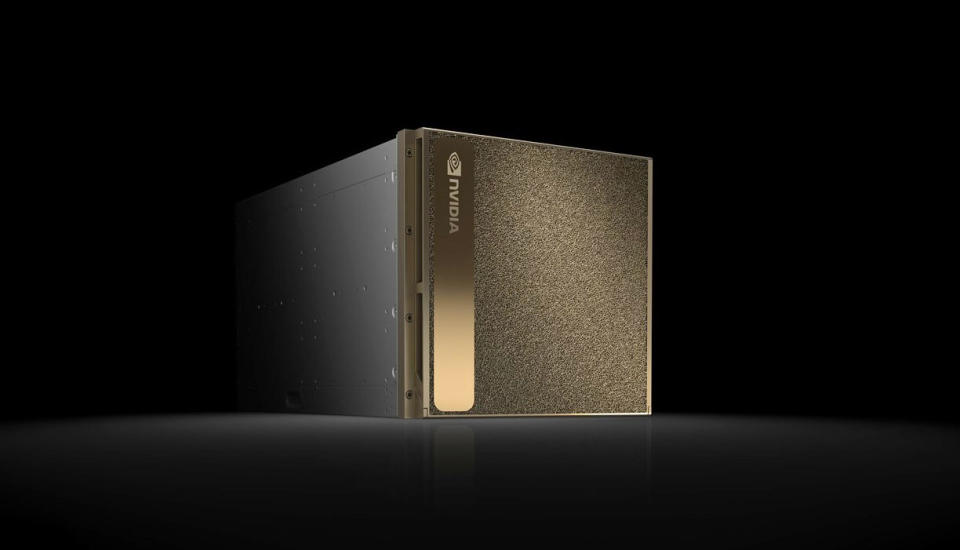

The company isn't just thinking small this year, though. NVIDIA also unveiled the DGX-2, the next version of its "personal AI supercomputer." It's about 10 times faster than the previous system, the $149,000 DGX-1, which was powered by NVIDIA's first Volta GPU, the Tesla V100. Notably, the DGX-2 is the first server able to deliver more than two petaflops of performance. That's mostly due to the revamped V100 GPU, which now sports 32GB of memory. The server is powered by 16 of those cards, all strung together by the company's NVSwitch technology.

NVIDIA is also calling the 350-pound DGX-2 the world's largest GPU (sure, technically). It will run you a cool $399,000 when it's released in the third quarter.