Gboard studies your behavior without sending details to Google

The search giant is testing it on Android first to improve the mobile keyboard's suggestions.

Last June, Apple started testing differential privacy, a method to gather behavior data while anonymizing user identities. The company expected it would improve QuickType predictions. Google has just begun trying out a similar method with Gboard to improve its automatic suggestions, but has taken a different approach to ensuring privacy: keeping your raw data on the device rather than uploading it to the cloud.

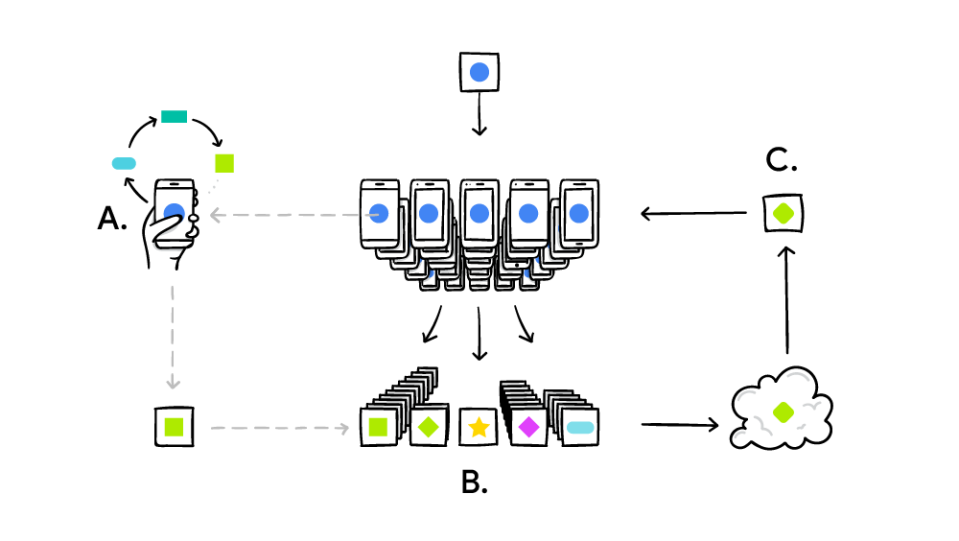

Gboard does this by downloading the latest text prediction model to your device, improving it by learning from behavior data on your phone and then sending a summary of the changes to the cloud. That summary is combined with the updates from all the other devices to create a new shared prediction model, which is then downloaded to start the process all over again. Google's research scientists are calling this method 'Federated Learning.'
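For the curious, that download, learn, summarize and combine loop can be sketched in a few lines of Python. This is only a toy illustration, not Google's code: the one-number model, the `local_update`, `aggregate` and `run_rounds` names, the learning rate and the sample data are all assumptions made up for this example. The weighted average used on the server is one common way to combine per-device updates.

```python
from typing import List, Tuple

Example = Tuple[float, float]  # (input, target) pair that never leaves the device


def local_update(global_w: float, data: List[Example],
                 lr: float = 0.1, steps: int = 5) -> Tuple[float, int]:
    """Hypothetical on-device step: start from the shared model, train on
    local data, and return only the weight change plus the example count."""
    w = global_w
    for _ in range(steps):
        # Gradient of the mean squared error for the toy model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w - global_w, len(data)


def aggregate(global_w: float, updates: List[Tuple[float, int]]) -> float:
    """Hypothetical server step: average the deltas, weighted by how many
    examples each device trained on, and apply them to the shared model."""
    total = sum(n for _, n in updates)
    avg_delta = sum(delta * n for delta, n in updates) / total
    return global_w + avg_delta


def run_rounds(devices: List[List[Example]], rounds: int = 20,
               w: float = 0.0) -> float:
    """Repeat the cycle: send the model out, collect summaries, combine."""
    for _ in range(rounds):
        updates = [local_update(w, data) for data in devices]
        w = aggregate(w, updates)
    return w


# Three made-up "phones", each holding private data that follows y = 2x.
devices = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
]
final_w = run_rounds(devices)
print(final_w)
```

Note that the server function only ever sees a weight change and a count from each device, never the examples themselves, which is the point of the approach.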

Keeping the learning process on your device and uploading small summaries to servers instead of large data batches reduces both power drain and the bandwidth required. That might make it less of a strain on devices and cloud services than Apple's technique, which adds "mathematical noise" to user data in order to protect identities. Google is testing Federated Learning first on Android's keyboard, Gboard, to improve its word suggestions. In the future, it might be used to improve each user's own personal language models on Gboard, as well as adjust photo rankings based on which types of photos people look at, share or delete.